React Server Components, Server Actions, HTMX, Hotwire, Livewire--all these new shiny things are pushed on us as the better and correct way of doing things. But the truth is, they are not better, they are just more tools that we can choose to use or choose to ignore.

It wasn't that long ago when single page applications (SPAs) were the obsession. The focus for web devs was on making the web feel native by writing large JavaScript applications that render everything in the browser, an approach known as client-side rendering (CSR).

The mid-2010s were the golden years for this. Apple had killed Flash, browsers were insanely performant, and SPA JavaScript frameworks started popping up everywhere, completely changing the perception of what web apps could be.

But those days are now behind us. We still have way too many JavaScript frameworks popping up, but the community has started to shift its focus back to rendering everything on the server, known as server-side rendering (SSR). Fine, rendering on the server has worked since the 90s, it works now, and it will work in the future.

The issue I have with this movement is that JavaScript and CSR are becoming the villain. The new SSR way of doing things is being presented and marketed to us as "the correct" way, better than the old SPA way.

Instead of using React to render everything on the client, we're told to use it with Next.js and render on the server. Instead of using JavaScript, we're encouraged to use abstractions like HTMX. Instead of JSON, we're encouraged to send HTML over the wire. Sure, these are all different approaches, but can you really tell me that any one of these approaches is objectively better than rendering everything in the browser?

A Bit of Web History

In late 1990, the World Wide Web was born, and for the first time, people were able to make an HTTP request from a web browser to fetch an HTML document. Initially this was only used by the people of CERN to fetch phone numbers from the CERN phone book, but the creator of the web, Sir Tim Berners-Lee, only used that as a starting point to get people on board with his drastic idea of a global, hyperlinked information system.

This idea always revolved around URLs and hyperlinked resources. Someone should be able to interact with some resource using a URL, then interact with related, linked resources. Everything on the web could be connected to something else, things connected to other things. This was 34 years ago, so HTML and HTTP were some good defaults for implementing this, but never a requirement.

By 1992, the ViolaWWW web browser, created by Pei Wei, was the default web browser used at CERN. This browser is special because it was one of the first web browsers to allow client-side scripting, using a custom Viola language. We take this for granted today with the epic rise of JavaScript, but it's kind of a big deal to think about how little time it took for someone to create a web browser that allowed client-side scripting, and how quickly it was accepted.

Over the years, there has always been a push to put more client-side interactivity into the browsers using technologies like Java Applets, Flash, VBScript, and of course, JavaScript. As of 2017, you can potentially run any language in the browser using WASM.

Computers have come a long way and are much more powerful than they used to be. Web browsers have evolved and turned into super apps capable of much more than most people know. You can create a static page with HTML and CSS, or create an amazingly complex application using JavaScript. Technology has advanced, and web developers have the ability to offer so much more to people over the World Wide Web.

Server Side Rendering

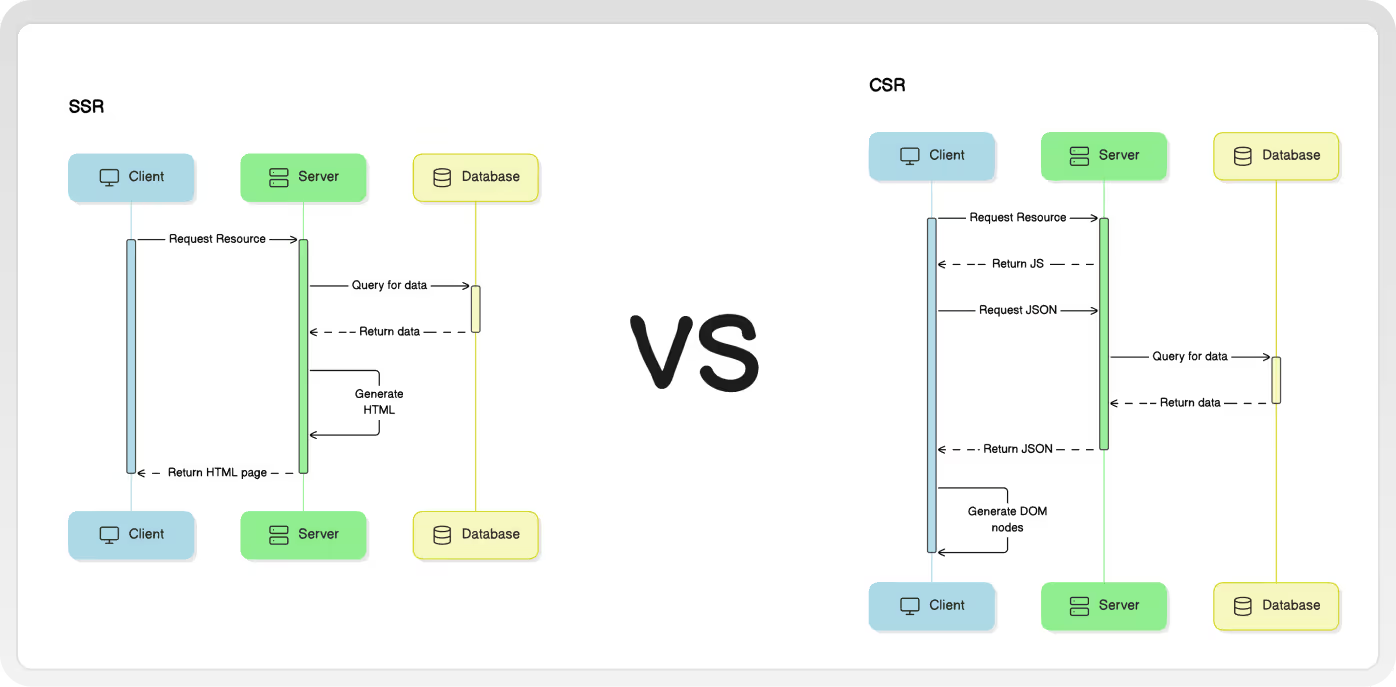

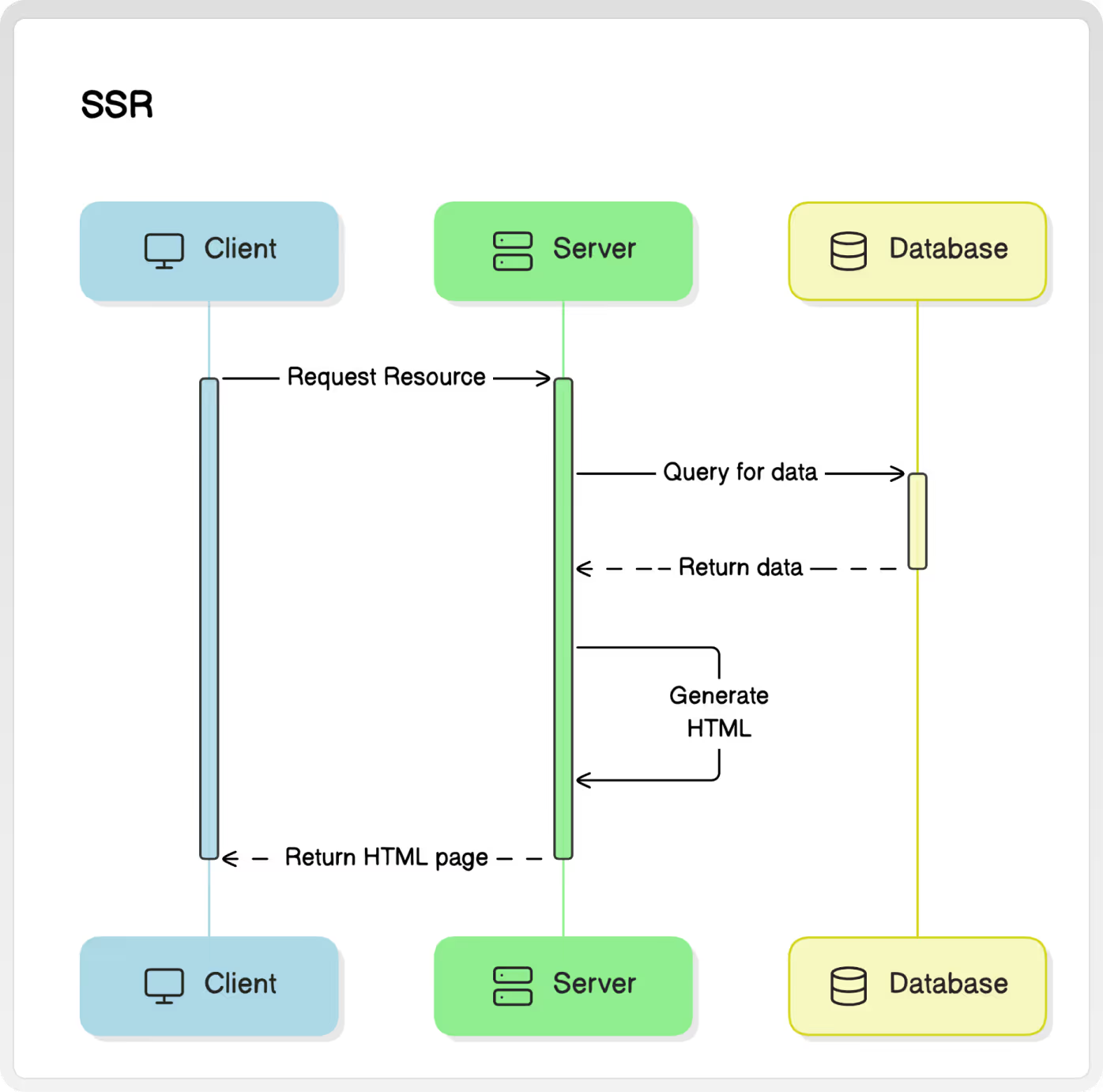

This model treats the browser as a thin client application whose only purpose is to view HTML documents. The browser requests HTML from the server every time there's a state change, so a new page is completely reconstructed every time something updates.

Server side rendering is probably the simplest approach to building any web app. Make a request to your server, your server can talk to a database or other services, then the server will send back the HTML page.
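Here's a minimal sketch of that request cycle. The `Post` shape, the `renderPostsPage` and `escapeHtml` names, and the `/posts/…` link format are all assumptions made up for illustration, and the posts array stands in for whatever a real server would read from its database.

```typescript
// Hypothetical data shape; a real app would load this from a database.
interface Post {
  id: number;
  title: string;
}

// Escape text so a post title can't inject markup into the page.
function escapeHtml(text: string): string {
  return text
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;");
}

// The server builds the complete HTML document for every request.
function renderPostsPage(posts: Post[]): string {
  const items = posts
    .map((post) => `<li><a href="/posts/${post.id}">${escapeHtml(post.title)}</a></li>`)
    .join("");
  return `<!doctype html><html><body><h1>Posts</h1><ul>${items}</ul></body></html>`;
}
```

Whatever language or framework you pick, the shape is roughly the same: data in, a full HTML page out, once per request.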

The benefit here is that there's no complexity in the browser because all application logic exists on a server. Developers are free to choose their favorite language and framework, and really focus on writing good server side code.

The downside to this approach is the slow response to any user interaction, which makes for a bad user experience. Responding to a user interaction should always be immediate, but the browser has to request a brand new HTML page from the server before the user gets any feedback.

For page navigation, this only kind of sucks, but imagine how something like Google Maps or Google Calendar would work in this environment. Moving the map or changing the date of a calendar entry would require some sort of button click or form submission, and the page would completely have to reload before you see any updates.

So how can we make the user experience a little bit better? Add a little bit of JavaScript.

Server Side Rendering with Some JavaScript

If we send just a little bit of JavaScript to the client, we can add interactivity to the web page, and improve the user experience. A calendar event can be dragged around the screen from one day to another, while sending an HTTP request to the server to update the entry in the database.
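As a rough sketch of the calendar case: the drop handler would update the event locally so the UI can redraw right away, and separately describe the background request that saves the change. The `CalendarEvent` shape, the function names, and the `/api/events/…` URL are assumptions for illustration only.

```typescript
interface CalendarEvent {
  id: string;
  title: string;
  date: string; // ISO date, e.g. "2024-05-01"
}

interface UpdateRequest {
  method: "PATCH";
  url: string;
  body: string;
}

// Move the event in local state right away so the UI can re-render instantly.
function moveEvent(events: CalendarEvent[], id: string, newDate: string): CalendarEvent[] {
  return events.map((item) => (item.id === id ? { ...item, date: newDate } : item));
}

// Describe the background request that persists the change on the server.
// The endpoint is hypothetical.
function buildUpdateRequest(id: string, newDate: string): UpdateRequest {
  return {
    method: "PATCH",
    url: `/api/events/${id}`,
    body: JSON.stringify({ date: newDate }),
  };
}
```

The user sees the result of `moveEvent` immediately; the request built by `buildUpdateRequest` goes out in the background.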

From the user perspective everything feels snappy and fast, which is a really good thing for a web app. The downside is that we've added more complexity, more code to maintain, and more places where things can break.

But maybe the improved user experience is worth it, so let's see what happens if we go all in on user experience.

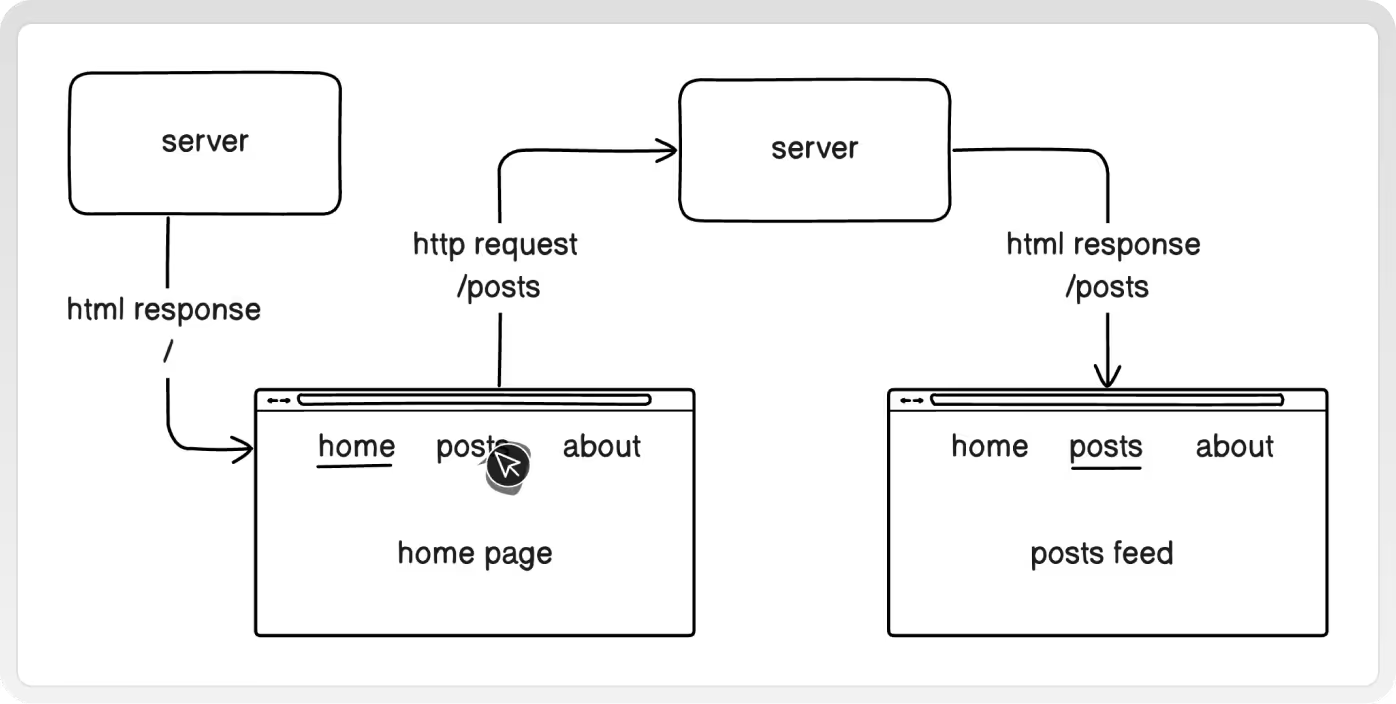

Client Side Rendering & Single Page Applications

This is where things get more interesting because your web app starts to feel like a native app. With a native desktop or mobile app, the user downloads the entire application onto their device before using it. If the app needs data from the web, it can make an HTTP request, probably for some JSON, and the native app can handle the response however it wants. The developers of the app can create any user experience they want. There is a lot of power here, but with great power...

So let's apply this idea to web apps. The browser can request a large JavaScript bundle up front, this is the app that runs in the browser just like a native app. Then every other request to the server is just an asynchronous request in the background that can be handled by the client side JavaScript.

There's an up front cost of getting the initial JavaScript, but every single action the user takes within the app can be immediate. None of the UI or logic is dependent on another network request, so updating the UI and navigating around the app can always happen instantly. The user could even continue to use the web app with no internet connection.
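To make the "no network request needed" part concrete, here's a tiny sketch of a client-side route table. The `routes` object and `renderRoute` function are hypothetical names, and the views return plain HTML strings instead of real components to keep the example small.

```typescript
type View = () => string;

// Every view ships in the initial bundle, so switching between them is a local lookup.
const routes: Record<string, View> = {
  "/": () => "<h1>Home</h1>",
  "/posts": () => "<h1>Posts</h1>",
};

// Pick the view for a path without asking the server for anything.
function renderRoute(path: string): string {
  const view = Object.prototype.hasOwnProperty.call(routes, path) ? routes[path] : undefined;
  return view ? view() : "<h1>Not found</h1>";
}
```

A real router library does much more (history, params, nested routes), but the core idea is the same: the code for every page is already in the browser.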

This is ultimate control over the user experience. The app feels fast, and the user is happy.

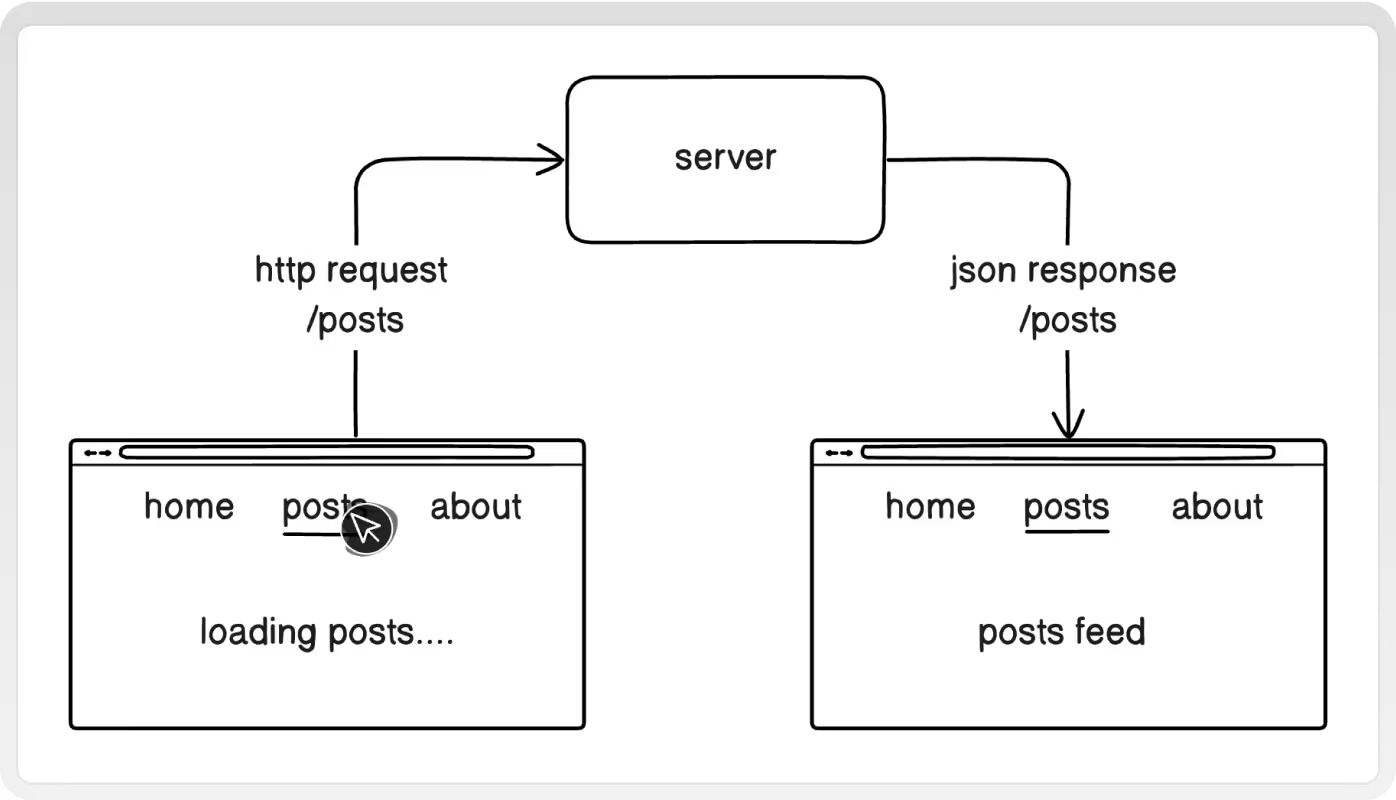

Sure this is great for highly interactive apps like calendars and maps, but what about "normal" web apps? Let's take a use case that applies to any application. When the user clicks a navigation link to navigate to a new page, let's say the user clicks the posts link, what should the experience be?

Navigating to a new page usually requires requesting some information from the server, so we have a network request to deal with, which can take some amount of time. In an SSR app, the user clicks the link, and for a moment it seems like nothing happens. The user just waits for the network request to complete, then a new page appears.

But we have complete control over the user experience, let's try something different. The user clicks the posts link and we immediately, without hesitation, do the following:

- Underline the posts link

- Update the URL in the browser to /posts

- Show "loading posts...." on the page

- Send a network request to grab the posts data from the server

And when the server responds, update the UI on the client to show the posts.
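One way to sketch those steps is as two small state updates: one that runs the instant the link is clicked, and one that runs whenever the server responds. The `PostsScreen` shape and both function names are assumptions for illustration; the network request itself would be started by the click handler right after the first update.

```typescript
interface PostsScreen {
  activeLink: string; // which nav link is underlined
  url: string; // what the address bar shows
  status: "idle" | "loading" | "loaded";
  posts: string[];
}

// Runs synchronously on click: every visible change happens before any response arrives.
function clickPostsLink(state: PostsScreen): PostsScreen {
  return { ...state, activeLink: "posts", url: "/posts", status: "loading" };
}

// Runs later, when the server responds with the posts data.
function receivePosts(state: PostsScreen, posts: string[]): PostsScreen {
  return { ...state, status: "loaded", posts };
}
```

Nothing in `clickPostsLink` waits on the network, which is the whole point.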

If a user clicks a link to move to a new page, the app should move to the new page straight away. All the little details that the user expects to see like underlining the link they clicked, changing the url path to reflect the new location, showing loading state, this should all happen immediately to avoid feeling janky. Don't wait for anything--immediate feedback is a good experience.

Here's an example of an expense tracker app built with React. Submitting a new expense can take some time, especially if the submission is over a slower network connection or if there was a file upload involved too. But everything here is immediate:

The moment that the user submits an expense, without delay, the app redirects to the /expenses page where the new entry will live with the other expenses. The new entry has not yet been saved to the database, so there are some loading skeletons to indicate that. Once the request is successful, those just disappear to indicate it was saved, and the page is in its normally loaded state, showing all expenses.

While this is all happening, the user is free to navigate to any other page in the app. They are not just stuck on the expenses page waiting for content to load. They can delete expenses or create another expense, or anything really. You have control to create any experience specifically for your app.
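This pattern is usually called an optimistic update. Below is a minimal sketch of the idea, not the actual code from the expense tracker: the `Expense` shape, the `pending` flag, and the three function names are assumptions for illustration.

```typescript
interface Expense {
  id: string;
  title: string;
  amount: number;
  pending: boolean; // true while the save request is still in flight
}

// Add the expense to the list immediately, flagged as pending (shown as a skeleton).
function addOptimistic(expenses: Expense[], tempId: string, title: string, amount: number): Expense[] {
  return [...expenses, { id: tempId, title, amount, pending: true }];
}

// Server confirmed the save: swap in the real id and clear the pending flag.
function confirmSaved(expenses: Expense[], tempId: string, savedId: string): Expense[] {
  return expenses.map((item) => (item.id === tempId ? { ...item, id: savedId, pending: false } : item));
}

// Server rejected the save: remove the optimistic entry again.
function rollback(expenses: Expense[], tempId: string): Expense[] {
  return expenses.filter((item) => item.id !== tempId);
}
```

The rollback path is where the extra complexity hides: you have to decide what the user sees when the save fails after the UI already said yes.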

You could also take this one step further and start implementing some local-first concepts where you make your app work, even when it's not connected to the internet. Imagine something like a note-taking app. A user could be offline and open the cached version of the app. They could take notes, use the app completely offline, and the user's data can be stored in the browser. When the user reconnects to the internet, all their data can synchronize with the server, and the user just keeps the same experience. There's a lot of power and freedom when you start treating your web app more like a native app.
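A very rough sketch of the note-taking idea: every edit is saved locally first, and anything that hasn't reached the server waits in a queue until the connection comes back. The `NoteChange` and `SyncState` shapes and the function names are hypothetical, and real local-first sync (conflict handling in particular) is a lot more involved than this.

```typescript
interface NoteChange {
  noteId: string;
  text: string;
}

interface SyncState {
  online: boolean;
  pending: NoteChange[]; // changes stored locally that the server hasn't seen yet
}

// Every edit is recorded locally first, whether or not there's a connection.
function saveNote(state: SyncState, change: NoteChange): SyncState {
  return { ...state, pending: [...state.pending, change] };
}

// On reconnect, hand back everything that needs to be sent and clear the queue.
function reconnect(state: SyncState): { state: SyncState; toSend: NoteChange[] } {
  return { state: { online: true, pending: [] }, toSend: state.pending };
}
```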

The point of all this is to demonstrate that you have the most control over the experience when you build an SPA because UI updates aren't dependent on a network request. This makes too much sense when you're building a highly interactive app, but there's no reason your basic blog site couldn't be an SPA. It all depends on how much control you want over the user experience.

Now let's talk about some of the downsides to this approach.

Complexity

Of course this is going to add much more complexity to your app. You now have to maintain a client side SPA and a server side app. You end up with two apps instead of one, maybe in two separate languages and sometimes two completely separate dev teams.

Remember that you have the power to create an amazing user experience, but you also have the power to create pure jank. So you need to invest time and energy into making the app work well.

Initial bundle size

With an SPA, you have to download the initial JavaScript, which can be large and may take a while. You sacrifice the time of the very first request to make all other interactions in your app much better.

For a lot of highly interactive apps, users are willing to wait a few more milliseconds for the initial page, as long as that results in a much nicer user experience for the remainder of the application's use. If a user is going to stick around and use your web app for a while, it's worth the tradeoff. If a user is going to visit one page and leave, then you can optimize for that by not loading in any JavaScript.

There are some things you can do to improve this initial performance. You can put your JavaScript on a CDN, like CloudFront, so the JavaScript is really close to the user and downloads are faster. And you can split your JavaScript into multiple files and download just enough JavaScript to load the current page, then download the rest of the JavaScript in the background.
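Splitting usually relies on dynamic `import()`, which bundlers turn into separate files. Here's a small sketch of a lazy page loader that only triggers the download once. `PageModule`, `Loader`, and `lazyPage` are illustrative names, and the `./pages/posts` path in the comment is hypothetical.

```typescript
type PageModule = { render: () => string };
type Loader = () => Promise<PageModule>;

// Wrap a loader so the chunk is requested at most once, no matter how often it's needed.
// In a real app the loader would typically be something like: () => import("./pages/posts")
function lazyPage(loader: Loader): Loader {
  let cached: Promise<PageModule> | undefined;
  return () => {
    if (!cached) {
      cached = loader(); // first call starts the download
    }
    return cached; // later calls reuse the same promise
  };
}
```

Calling the wrapped loader early, for example on link hover or once the first page is idle, is one way to fetch the rest of the app in the background.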

Also, a lot of this JavaScript gets cached in the browser, so if the user closes the browser window and comes back to your web app, the JavaScript will still be there and won't need to be downloaded again.
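How long the browser keeps those files depends on the caching headers your server or CDN sends. A common setup, sketched here under the assumption that your build tool puts a content hash in each file name (the `app.3f9a1c2b.js` style and the `cacheControlFor` name are made up for this example), is to cache hashed files for a long time and make the browser revalidate everything else.

```typescript
// Assumed naming convention: the build adds a hex content hash, e.g. "app.3f9a1c2b.js".
const HASHED_ASSET = /\.[0-9a-f]{8,}\.(js|css)$/;

// Pick a Cache-Control value for a file path.
function cacheControlFor(path: string): string {
  return HASHED_ASSET.test(path)
    ? "public, max-age=31536000, immutable" // contents never change under this name
    : "no-cache"; // e.g. index.html: revalidate so new deploys are picked up
}
```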

SEO

To actually see any meaningful content, the browser has to first download JavaScript, then run the JavaScript to render a page. Google's search indexing robots have to do the same, which means they have to work harder to index your page. This may or may not have negative implications for search ranking. It's kind of hard to tell at this point, and no one knows how Google actually works.

If SEO is worse for SPAs, here are two points I want to bring up:

- Optimize for your end users, not for Google robots.

- If SEO is the most important, you can serve prerendered content to robots and a normal SPA to real users. Do this manually with an if statement on your server, or use a service like Prerender.
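The "if statement on your server" could look something like the sketch below. The crawler list is a small, non-exhaustive sample of well-known user agent tokens, and `isSearchBot` and `chooseResponse` are hypothetical names, not part of any library.

```typescript
// A few well-known crawler tokens; a real list would be longer and need upkeep.
const BOT_PATTERN = /googlebot|bingbot|duckduckbot|baiduspider|yandex/i;

function isSearchBot(userAgent: string): boolean {
  return BOT_PATTERN.test(userAgent);
}

// Decide what to send back based on who's asking.
function chooseResponse(userAgent: string): "prerendered-html" | "spa-shell" {
  return isSearchBot(userAgent) ? "prerendered-html" : "spa-shell";
}
```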

Tools

The current state of tooling for frontend apps is amazing. There's never been a better time to work on SPAs, but that hasn't always been the case. People still have a sour taste in their mouth about the way it used to be, but it's much better now, I promise.

One thing that's challenging is the overwhelming amount of choice. There's no clear direction on which tools, libraries, frameworks to use. It's a bit of a paradox of choice situation that seems to be uniquely out-of-hand for frontend devs.

You will need a:

- Build tool (Vite)

- UI library (React)

- Routing library (React Router)

- State management library (TanStack Query)

- Form library (Formik)

And there's more, but those are the basics. You're pretty safe choosing the tried and tested libraries, like the ones I've listed here, but there's a lot of hype around libraries, and it's easy to get distracted by shiny new things.

SPAs only work on powerful computers with high speed internet

I think these points are mostly just rumours. I've tested all my modern React apps on an old 2010 MacBook and everything loads really fast, it feels performant. This is JavaScript, not Flash.

As for internet speed, SPAs are really great when you have a bad, slow internet connection. If the site isn't cached, and you have to download the initial JavaScript bundle, that can be slow on a slow internet connection. But as soon as that's done, it's the best kind of app to use with a slow internet connection.

If you open up Discord in your browser right now, then completely disconnect your internet, Discord will still work as an offline app. You can still view messages that were already loaded, you can navigate around the app, you can even try sending a new message which will get synced up to the server when your connection is reestablished.

SPAs are the best for user experience, but they add complexity. So what if you don't want to deal with all the complexity?

Less JavaScript, Less Complexity

"JavaScript is a bad general purpose programming language. It's a big ugly messy language."

-- Carson Gross, Creator of HTMX

What if you don't want to maintain a complete frontend SPA? If your entire web app is built with Rails, or Laravel, or C# or <insert your favorite backend language here>, then it's understandable that you might not want to introduce an entirely new JavaScript application into the mix. It's a complexity tradeoff and maybe the better user experience isn't worth the time and energy (and money) required to maintain a good SPA.

In that case, you still have some good options. Rails has Hotwire, Laravel has Livewire, C# has Blazor, Phoenix has LiveView, and everyone else has HTMX. You write little to no JavaScript and just stay in your language of choice, your framework of choice, your happy place.

JavaScript still runs in the browser, but you're not responsible for it. The framework or library abstracts away all the complexities for you and handles the client-side experience. You only need to focus on writing good backend code and HTML.
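To give a feel for the HTML-over-the-wire style, here's a small sketch using HTMX attributes. It's written in TypeScript only to stay consistent with the rest of this post; the server side could be any language. The function names, the `/posts` endpoint, and the `#posts` target are assumptions for illustration.

```typescript
// Escape text before placing it inside HTML.
function escapeText(text: string): string {
  return text.replace(/&/g, "&amp;").replace(/</g, "&lt;").replace(/>/g, "&gt;");
}

// Page markup: the hx-* attributes tell htmx to GET /posts on click
// and swap the response into the #posts element. No hand-written JavaScript.
function renderPostsButton(): string {
  return '<button hx-get="/posts" hx-target="#posts" hx-swap="innerHTML">Load posts</button><ul id="posts"></ul>';
}

// The /posts handler responds with an HTML fragment, not JSON and not a full page.
function renderPostsFragment(titles: string[]): string {
  return titles.map((title) => `<li>${escapeText(title)}</li>`).join("");
}
```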

This can be a really good option because you're potentially reducing the complexity of your app by a lot. You eliminate the overhead of maintaining a complete SPA, while still having a good user experience.

The tradeoff is that you still lose a lot of control over the user experience. An SPA is still the only way to get complete control for the most optimal experience for your specific app. You're giving up control and opinions and letting another library manage these things for you.

What about the Meta Frameworks (Isomorphic JavaScript)

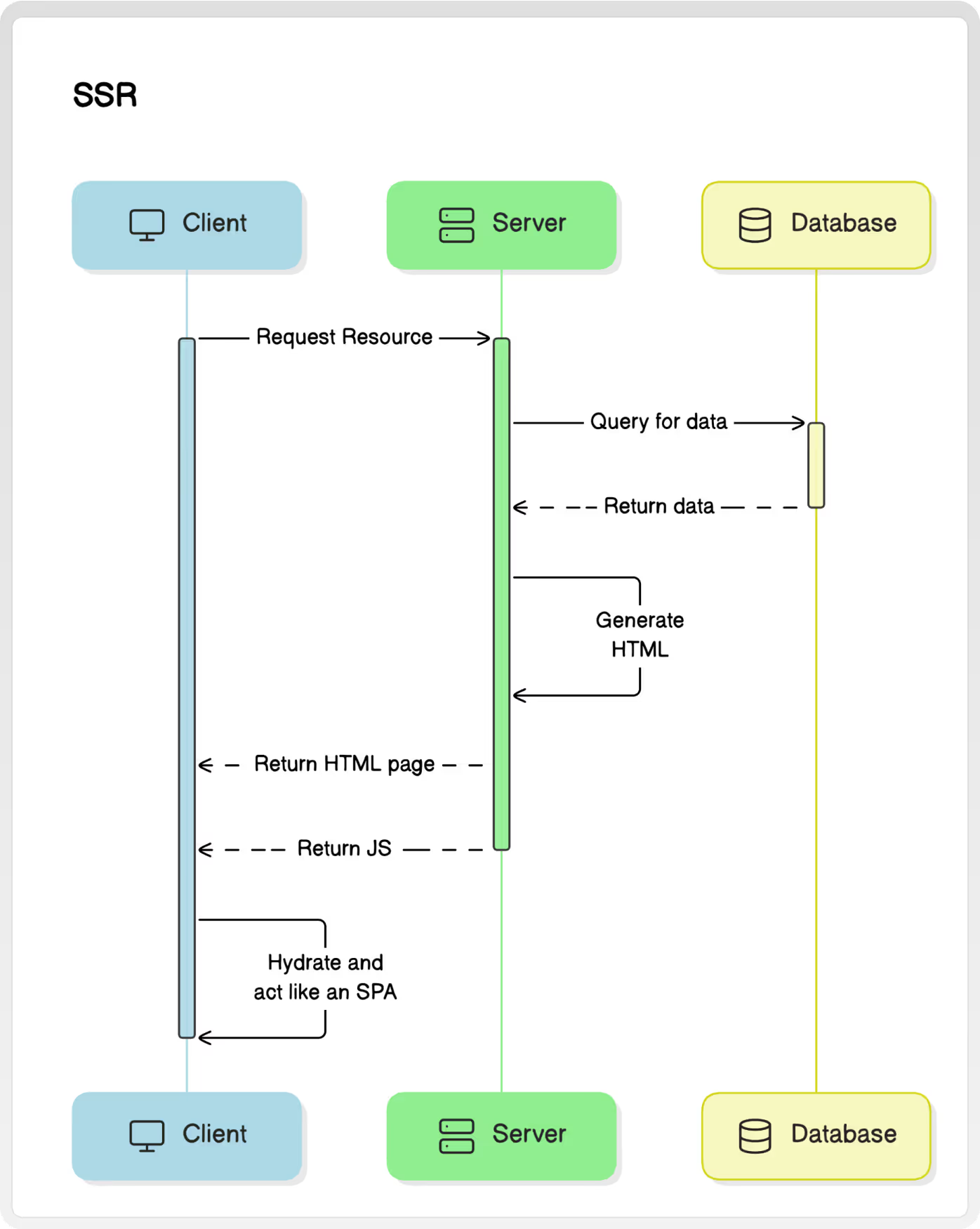

At this point, every SPA framework has its own meta framework. React has Next, Vue has Nuxt, Solid has SolidStart, etc. These frameworks all take an isomorphic approach to JavaScript where you can render the same components on a server and on the client.

The big win here is that the initial request to the server will download or stream HTML to the client, so the user sees the meaningful page contents more quickly. Then it downloads and runs the client-side JavaScript bundle so it can start acting like an SPA.
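Stripped of all framework machinery, the isomorphic idea looks roughly like this: one component function that both the server and the client can call. This is a toy sketch with plain strings, not how React or Next.js actually implement it; `Counter`, `renderOnServer`, `handleClick`, and the `window.__STATE__` convention are all assumptions for illustration.

```typescript
interface CounterState {
  count: number;
}

// One component, usable in both environments because it only maps state to markup.
function Counter(state: CounterState): string {
  return `<button id="counter">Clicked ${state.count} times</button>`;
}

// Server: render the component to HTML and embed the state so the client can resume from it.
// Embedding JSON like this is only safe here because the state holds just a number.
function renderOnServer(state: CounterState): string {
  return `<div id="root">${Counter(state)}</div><script>window.__STATE__=${JSON.stringify(state)}</script>`;
}

// Client: once the bundle has loaded, state changes are handled locally.
function handleClick(state: CounterState): CounterState {
  return { count: state.count + 1 };
}
```

The user sees the server's HTML first, then the client code takes over with the same component and the same state.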

If implemented correctly, this sounds ideal. This sounds like the perfect solution. Now your SPA can run in the browser and on the server, bundling CSR and SSR together for the best of both worlds.

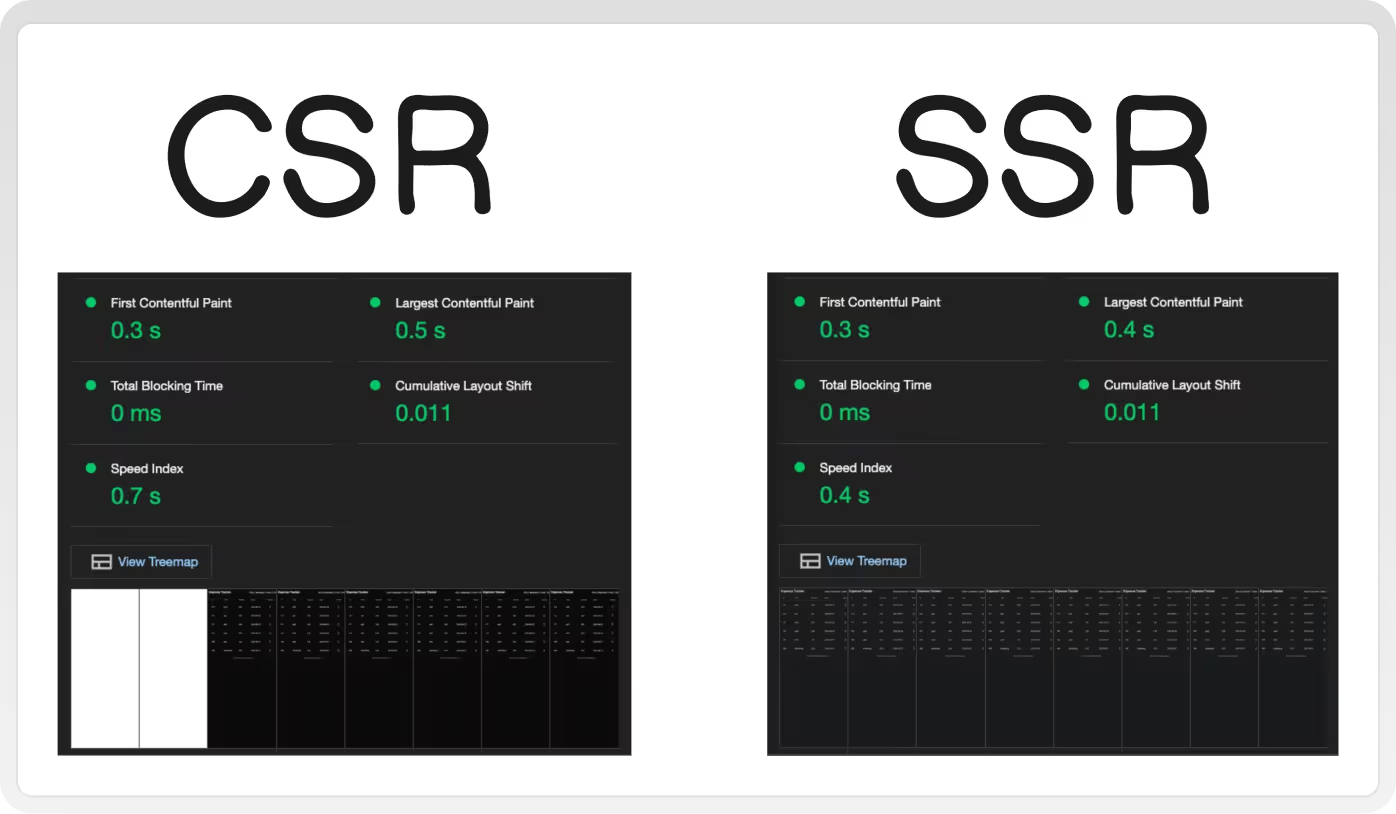

Here's a Lighthouse comparison of the same expense tracker app. The left uses CSR with React hosted on AWS CloudFront and Lambda. The right uses SSR with Next.js hosted on Vercel. In my experience, this is typical out-of-the-box performance with meta frameworks.

SSR is slightly faster to get the initial content because there are fewer network requests and we're not relying on client-side processing. So first page load is faster, good.

These frameworks have also done a really great job at making it easier to get started. Everything just kind of works out of the box and you don't have to think about which tools to use. And if you're willing to pay, Vercel and Netlify will completely take care of hosting these types of apps for you.

So it's easier, faster, newer, what's the problem?

Complexity

Here we go again with complexity. Of course this adds even more complexity, because you now need to add servers that can run the same code as the client. Every single page in your app needs a plan for data fetching and rendering in two completely different environments. If you start to think about all the pieces that have to work together to get this right, it's kind of mind blowing.

The frameworks try to simplify it a lot, but the complexity is still there and foot guns are more abundant. You are writing code in a single code base where there is no completely clear separation of server and client code.

Hosting complexity also becomes an issue because you can't just put your app on a CDN close to the user. You're either distributing servers or functions globally, or causing even longer rendering times to users far away from your servers.

Constraints

To try and make things easier for developers, the frameworks put defaults and constraints in place. But constraints make it more difficult for developers to create a custom experience for the users, and the defaults usually optimize initial page load time over long-term user experience.

One example is that bundle splitting is usually based on routes, which makes a route navigation feel janky:

This is an official example of routing by Next.js, but this always seems to be the default case. In a slow network environment, the feedback to the user is delayed and the page transition feels janky.

Here's a more extreme example of Next's official AI example:

The url change is dependent on the network request, maybe a slow one. If you submit more than one message in a short amount of time, messages just get dropped when the url finally updates. That's a terrible experience for the user.

But this is how the Next.js router works. It's how a lot of SSR routers work. To get complete control of the user experience, you might find yourself working against the framework, and that's a bad developer experience.

Handling routing for something that has to render the same on the server and on the client is difficult, so there are going to be tradeoffs.

The Communication Problem

This isn't just the normal tech influencers stirring things up on Twitter. React, Next, and Vercel are presenting SSR frameworks as the correct way of doing things, and implying that pure CSR SPAs aren't good anymore.

If you find yourself casually reading the React docs, they will tell you to use a full-stack framework like Next.js or Remix. If you choose to use Next.js, you're immediately opted into React Server Components (RSCs) and told to limit the amount of "client components". Limit the amount of control you have over the user experience.

When the UI gets janky in places, because updating the UI depends on a network connection, you'll be told that new solutions like Partial Prerendering (PPR) will solve the problem. Add more server rendering features to solve server rendering problems.

Don't get me wrong, I like RSCs, I like PPR, I think those are both cool technologies that I would opt into using when it makes sense for my app. I just hate how these things are being communicated to us.

The truth is, they are not better, they are just more tools that we can choose to use or choose to ignore.

Final Thoughts

There are always going to be tradeoffs because each approach offers different advantages and challenges. The best thing you can do is to know what you're gaining and what you're losing when you make a decision. No single technology or approach is objectively better or worse; the best solution for you depends on your needs and priorities. Just make sure you're prioritizing the right things, and try to make good apps for your users. Don't forget about your users.
